Recent advances have enabled diffusion models to compress video efficiently while maintaining high visual quality. By storing only keyframes and using these models to interpolate the remaining frames during playback, this approach aims to preserve high fidelity while transmitting minimal data. The process is adaptive, trading off detail retention against compression ratio, and the model can be conditioned on lightweight side information such as text descriptions or edge maps to improve results.

Introduction

The rapid growth of digital video content across various platforms necessitates more efficient video compression technologies. Traditional compression methods struggle to balance compression efficiency with high visual fidelity. This blog post discusses a novel approach using diffusion-based models for adaptive video compression, showcasing substantial improvements in efficiency and quality.

Traditional Compression Challenges: Standard compression algorithms, like H.264/AVC, H.265/HEVC, and the newer H.266/VVC, primarily focus on reducing spatial and temporal redundancies. However, they often fall short when dealing with high-quality streaming requirements, particularly for content with complex textures or fast movements.

Breakthrough with Diffusion Models: Diffusion models, originally designed for image synthesis, have shown promise for video compression. Because they learn rich image statistics, missing frames can be generated conditioned on the frames that were transmitted, rather than reconstructed from explicitly coded prediction residuals. This marks a shift from predictive coding to conditional frame generation.

Theoretical Framework

This section delves into the mathematical foundations and technical descriptions of how diffusion models are applied to video compression.

Diffusion for Frame Generation: A diffusion model defines a forward process that progressively adds Gaussian noise to frames, and it learns a reverse process that removes this noise step by step. When the reverse process is conditioned on neighboring frames, it generates plausible new frames that are consistent with that context, which is what makes it usable for frame prediction.
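
To make the two processes concrete, here is a minimal NumPy sketch of standard DDPM noising and a single reverse step. It is not the authors' implementation: the linear schedule and its endpoints are common DDPM defaults assumed for illustration, the function names are hypothetical, and `eps_pred` stands in for the output of a trained network that, in the video setting, would also see the conditioning frames.

```python
import numpy as np

def linear_beta_schedule(T: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> np.ndarray:
    """Linearly increasing noise variances beta_1..beta_T (DDPM-style defaults, assumed)."""
    return np.linspace(beta_start, beta_end, T)

def forward_noise(x0, t, alpha_bar, rng):
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(alpha_bar_t) * x_0, (1 - alpha_bar_t) * I) in one shot."""
    eps = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return xt, eps

def reverse_step(xt, t, eps_pred, betas, alpha_bar, rng):
    """One ancestral DDPM step x_t -> x_{t-1}, given a noise estimate eps_pred."""
    alpha_t = 1.0 - betas[t]
    mean = (xt - betas[t] / np.sqrt(1.0 - alpha_bar[t]) * eps_pred) / np.sqrt(alpha_t)
    if t == 0:
        return mean  # final step: no fresh noise is added
    return mean + np.sqrt(betas[t]) * rng.standard_normal(xt.shape)

rng = np.random.default_rng(0)
betas = linear_beta_schedule(1000)
alpha_bar = np.cumprod(1.0 - betas)  # cumulative signal retention, decreasing in t
frame = rng.uniform(-1.0, 1.0, size=(3, 64, 64))  # toy frame scaled to [-1, 1]

x_mid, _ = forward_noise(frame, 500, alpha_bar, rng)  # heavily noised frame
# With a perfect ("oracle") noise estimate, the final reverse step recovers x_0 exactly.
x_first, eps_true = forward_noise(frame, 0, alpha_bar, rng)
recovered = reverse_step(x_first, 0, eps_true, betas, alpha_bar, rng)
```

In a real codec the oracle noise is unavailable; the network's estimate replaces it, and sampling runs from pure noise at the last timestep down to step 0.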

Video Compression via Diffusion: The core idea is to use the generative capabilities of diffusion models to predict upcoming frames from past frames, so that not every frame has to be transmitted.
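
The sketch below shows how a sender can skip frames when the receiver runs the same predictor, in the spirit of the identical host and client models and the quality function described for Figure 1. Several assumptions are made here: the quality function is a PSNR threshold, the predictor is deterministic (a stochastic diffusion sampler would need a seed shared by both sides), and `hold_last` is a hypothetical stand-in for the diffusion model. All function names are illustrative.

```python
from typing import Callable, List, Optional
import numpy as np

Predictor = Callable[[List[np.ndarray]], np.ndarray]

def psnr(ref: np.ndarray, est: np.ndarray, max_val: float = 1.0) -> float:
    mse = float(np.mean((ref - est) ** 2))
    return float("inf") if mse == 0.0 else float(10.0 * np.log10(max_val ** 2 / mse))

def host_encode(frames: List[np.ndarray], predict: Predictor, threshold_db: float) -> List[Optional[np.ndarray]]:
    """Send a frame only if the shared predictor cannot reproduce it well enough."""
    stream: List[Optional[np.ndarray]] = []
    client_view: List[np.ndarray] = []  # mirrors exactly what the client will hold
    for frame in frames:
        if client_view:
            pred = predict(client_view)
            if psnr(frame, pred) >= threshold_db:
                stream.append(None)       # skipped: the client regenerates this frame
                client_view.append(pred)  # stay in sync with the client's copy
                continue
        stream.append(frame)              # first frame and poorly predicted frames are sent
        client_view.append(frame)
    return stream

def client_decode(stream: List[Optional[np.ndarray]], predict: Predictor) -> List[np.ndarray]:
    decoded: List[np.ndarray] = []
    for item in stream:
        decoded.append(item if item is not None else predict(decoded))
    return decoded

def hold_last(history: List[np.ndarray]) -> np.ndarray:
    """Hypothetical stand-in for the diffusion model: repeat the last frame."""
    return history[-1]

frames = [np.zeros((4, 4))] * 3 + [np.ones((4, 4))]
stream = host_encode(frames, hold_last, threshold_db=30.0)
decoded = client_decode(stream, hold_last)
```

Here only the first and last frames are transmitted. The host conditions its predictions on the frames the client will actually hold, not on the originals, so both sides never drift apart.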

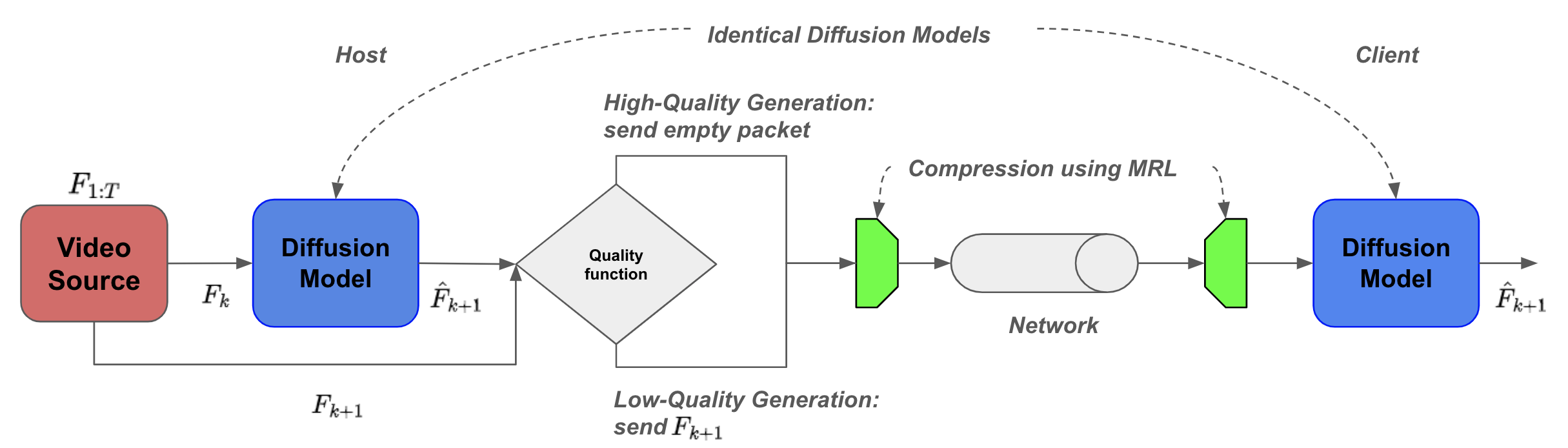

Figure 1: Main Design Architecture: A video streaming pipeline using our method: identical diffusion models placed at the host and the client; a quality function that governs which frames are sent downstream; and compression using Matryoshka Representation Learning (MRL). Combining these design features significantly increases reconstruction quality and reduces compressed size.

Methodology

We introduce an approach that combines traditional video compression techniques with the generative capabilities of diffusion models.

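Adaptive Video Compression Using MCVD: Our method employs the Masked Conditional Video Diffusion (MCVD) model, which generates high-quality frames conditioned on past and/or future frames. Because the conditioning frames are randomly masked during training, a single model can handle prediction, interpolation, and generation. This lets the compression rate adapt to frame quality: frames that the model can reconstruct well need not be transmitted.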
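
The masking idea can be sketched as follows. This is a simplified illustration rather than MCVD's code: it assumes the whole block of past frames and, independently, the whole block of future frames are zeroed with probability `p_mask`, and the helper names are hypothetical.

```python
import numpy as np

def mask_conditioning(past, future, p_mask, rng):
    """Independently zero out the block of past frames and the block of future
    frames, each with probability p_mask; also return which blocks were dropped."""
    drop_past = bool(rng.random() < p_mask)
    drop_future = bool(rng.random() < p_mask)
    past_c = np.zeros_like(past) if drop_past else past
    future_c = np.zeros_like(future) if drop_future else future
    return past_c, future_c, drop_past, drop_future

def task_for(drop_past: bool, drop_future: bool) -> str:
    """Which task a training example teaches, given which context stayed visible."""
    if not drop_past and not drop_future:
        return "interpolation"
    if not drop_past:
        return "future prediction"
    if not drop_future:
        return "past prediction"
    return "unconditional generation"

rng = np.random.default_rng(0)
past = rng.standard_normal((2, 3, 32, 32))    # 2 past frames, each (C, H, W)
future = rng.standard_normal((2, 3, 32, 32))  # 2 future frames
p_c, f_c, dp, df = mask_conditioning(past, future, p_mask=0.5, rng=rng)
print(task_for(dp, df))
```

At decoding time no masking is random: the receiver conditions on whichever transmitted keyframes surround a missing frame, which turns generation into interpolation or prediction.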

Integration of Matryoshka Representation Learning: To compress further, we incorporate Matryoshka Representation Learning (MRL), which trains embeddings whose leading coordinates are themselves usable lower-dimensional representations. The embedding can therefore be truncated before transmission, significantly reducing the size of the data packets sent across the network.
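
A minimal NumPy sketch of the Matryoshka objective: the same loss is applied to several nested prefixes of one embedding and summed, each prefix with its own linear head. MRL was introduced with a classification loss; the mean-squared reconstruction loss, the nested sizes, and the function names here are assumptions for illustration. Training would compute gradients of this objective with an autodiff framework; the sketch only evaluates it.

```python
import numpy as np

def matryoshka_loss(z, target, heads, dims, weights=None):
    """Sum the same loss over nested prefixes z[:, :m] for every m in dims.
    heads[m] is a linear decoder of shape (m, out_dim) for that prefix size."""
    if weights is None:
        weights = {m: 1.0 for m in dims}
    total = 0.0
    for m in dims:
        recon = z[:, :m] @ heads[m]  # decode from the first m coordinates only
        total += weights[m] * float(np.mean((recon - target) ** 2))  # MSE (assumed loss)
    return total

def truncate(z, m):
    """At transmission time, keep only the first m coordinates of each embedding."""
    return z[:, :m].copy()

rng = np.random.default_rng(0)
dims = (8, 16, 32)                # nested sizes (hypothetical)
z = rng.standard_normal((4, 32))  # batch of 4 embeddings, full size 32
target = rng.standard_normal((4, 10))
heads = {m: rng.standard_normal((m, 10)) * 0.1 for m in dims}
loss = matryoshka_loss(z, target, heads, dims)
packet = truncate(z, 8)           # 4x fewer values than the full embedding
```

Because every prefix is trained to be useful on its own, the sender can choose the truncation size per frame or per bitrate budget without retraining.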

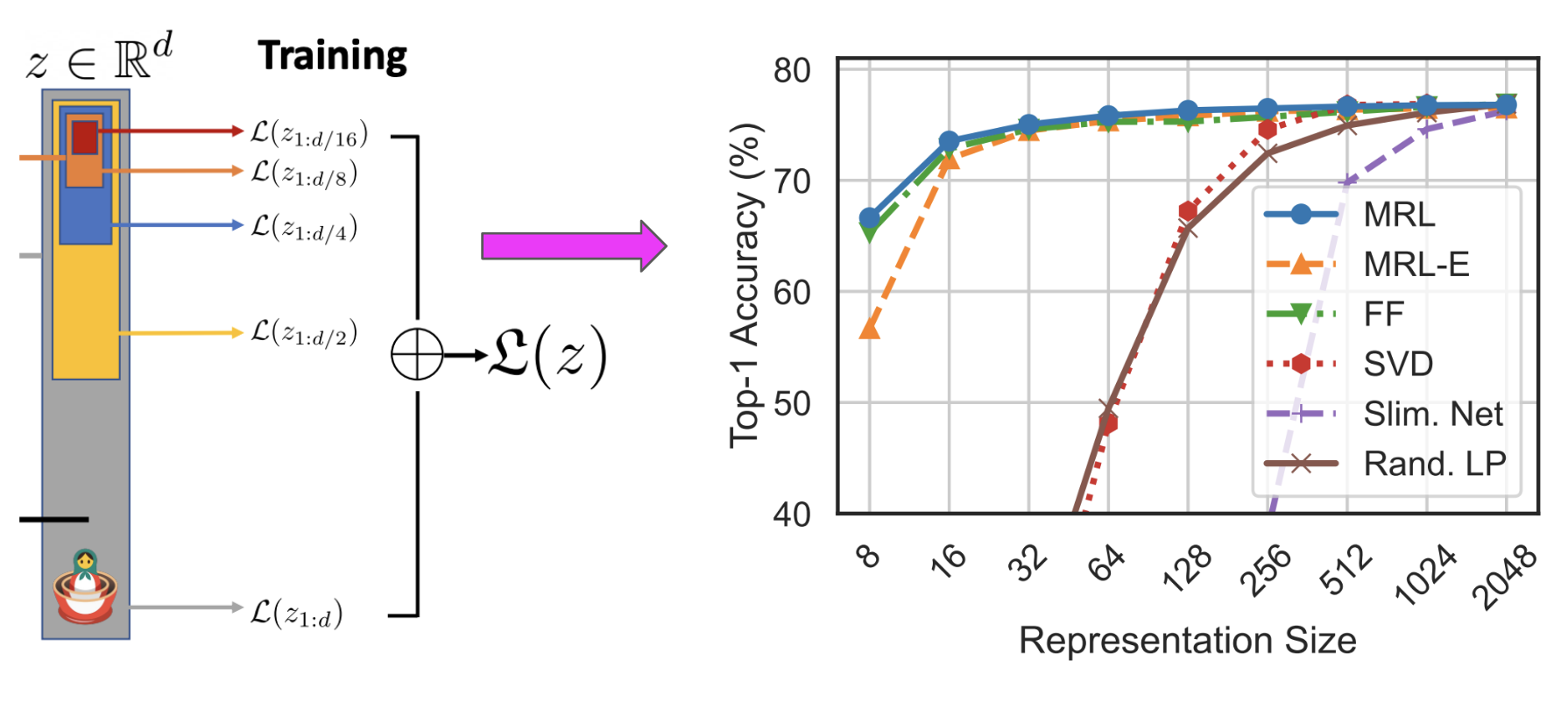

Figure 2: Matryoshka Representation Learning: The same loss is computed for each nested chunk (prefix) of the embedding, and the losses are summed (left). This yields a better size-to-performance ratio on classification tasks (right).

Experimental Validation

We conducted several experiments to validate the effectiveness of our proposed method against traditional compression standards.

Experimental Setup: The image-compression experiments used the Kodak image suite, and performance was evaluated with bits per pixel (BPP) and peak signal-to-noise ratio (PSNR).
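
For reference, both metrics reduce to short formulas: PSNR is 10 · log10(MAX² / MSE) in dB, and BPP is the compressed size in bits divided by the number of pixels, counting pixels rather than color channels as is conventional. The sketch below assumes 8-bit images (MAX = 255); the function names and the example byte count are illustrative.

```python
import numpy as np

def psnr(reference, reconstruction, max_val=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    diff = reference.astype(np.float64) - reconstruction.astype(np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    return 10.0 * float(np.log10(max_val ** 2 / mse))

def bits_per_pixel(num_bytes, height, width, num_frames=1):
    """Compressed size in bits divided by the number of pixels coded."""
    return 8 * num_bytes / (height * width * num_frames)

ref = np.full((512, 768, 3), 128, dtype=np.uint8)  # Kodak images are 768x512
rec = ref.copy()
rec[0, 0, 0] = 138                                 # one channel value off by 10
print(psnr(ref, rec))
print(bits_per_pixel(12_288, 512, 768))            # 98,304 bits / 393,216 px = 0.25
```

Casting to float64 before subtracting avoids silent wrap-around in unsigned 8-bit arithmetic.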

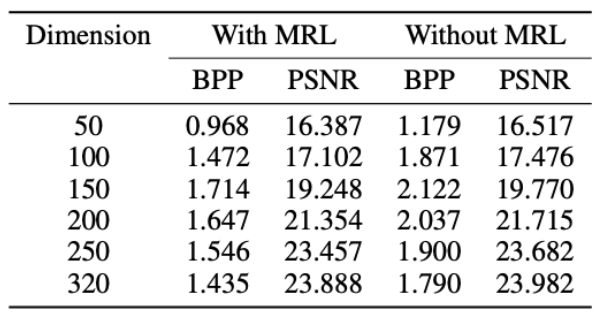

Figure 3: Extreme image compression on Kodak images: Our method reconstructs images from the standard Kodak test set at much lower BPP.

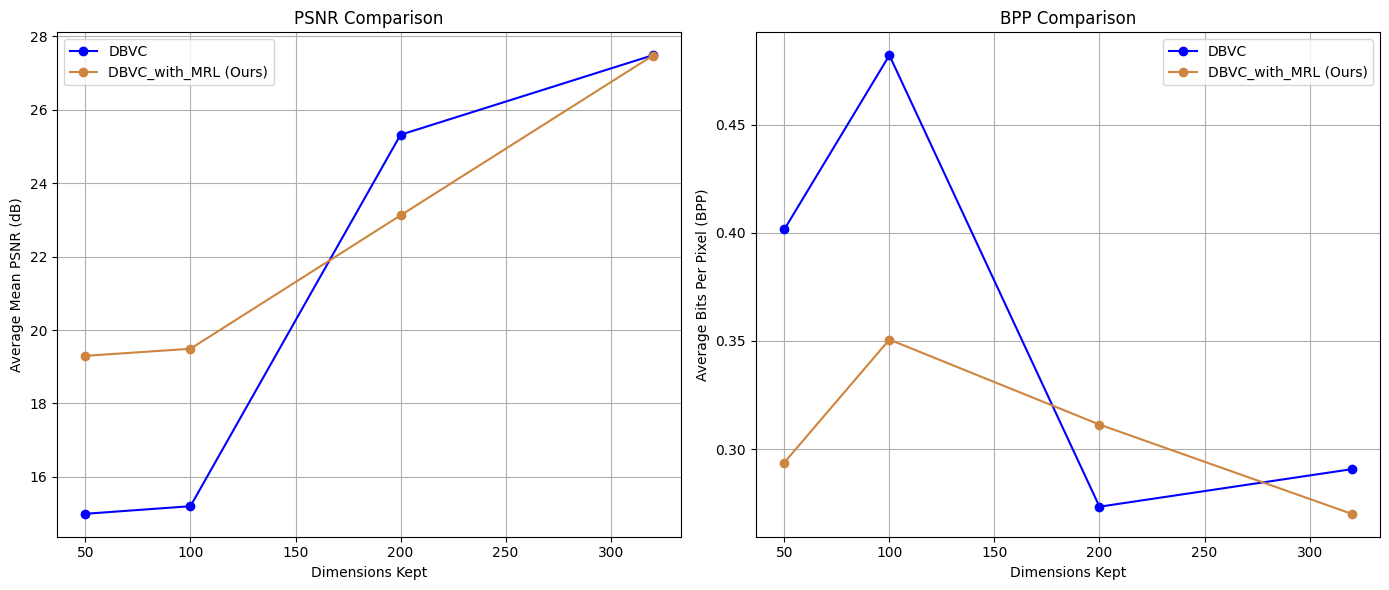

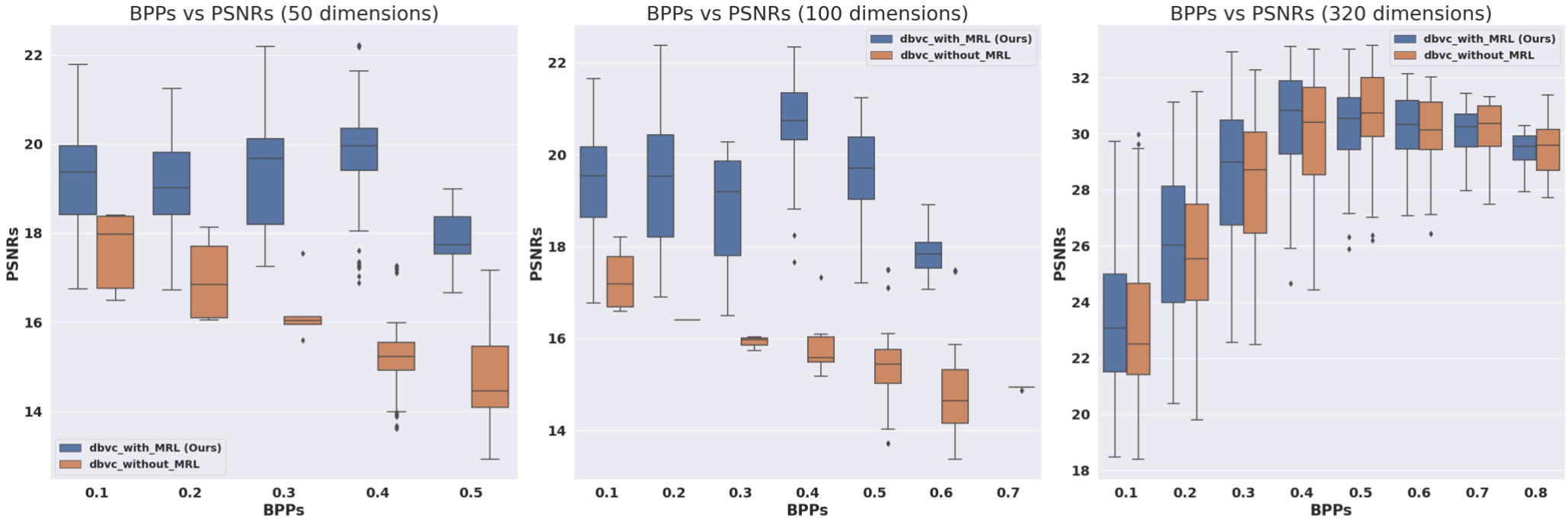

Results: The results in Figure 3 show that MRL improves image compression without loss of reconstruction quality. We use the same NIC model in our video streaming application; due to limited infrastructure, we use an MCVD diffusion model pre-trained on the Cityscapes dataset. Our method achieves comparable video reconstruction quality, measured in PSNR, at lower bits per pixel (BPP), indicating greater compression without a loss in quality. Our main results are shown in Figure 4 and Figure 6.

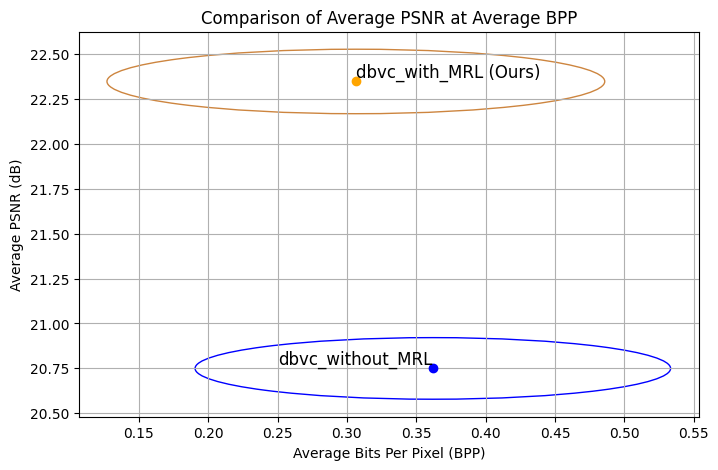

Figure 4: Extreme video compression with higher performance: Our method achieves a higher compression rate with better reconstruction quality than the benchmark. Colored circles represent the variance around the mean values over 40 sample videos (blue: benchmark; orange: our method).

Figure 5: Better video reconstruction at a higher compression rate: With small embedding dimensions (50 and 100), our method reconstructs better video at lower BPP.

We first compare our method to DBVC as a function of embedding dimension. Our experiments show that at small dimensions our method achieves much higher PSNR and lower BPP, as shown in Figure 5. They also show that our method has a higher compression rate because MRL shrinks the encoded vector, which directly lowers BPP.
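
The link between embedding size and BPP is simple arithmetic: if each transmitted frame carries a d-dimensional embedding stored with b bits per value, it contributes d · b / (H · W) bits per pixel. The sketch assumes 32-bit floats, no entropy coding, and a hypothetical 64×64 frame; these are not the paper's settings, and the function names are illustrative.

```python
def embedding_bpp(dim, bits_per_value, height, width):
    """BPP contributed by one d-dimensional embedding per transmitted frame."""
    return dim * bits_per_value / (height * width)

def clip_bpp(frames_sent, total_frames, dim, bits_per_value, height, width):
    """Average BPP over a clip when only some frames carry an embedding."""
    return frames_sent * dim * bits_per_value / (total_frames * height * width)

for d in (50, 100):
    print(d, embedding_bpp(d, 32, 64, 64))
# 50 0.390625
# 100 0.78125
```

Under these assumptions BPP scales linearly with the embedding dimension, so truncating an MRL embedding from 100 to 50 values halves the per-frame cost; skipping frames lowers the clip average further.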

Next, we compare the overall performance of our method against the benchmark, DBVC, through the BPP and PSNR metrics.

Our method achieves roughly 8% higher average PSNR than the benchmark at the same compression rate, as shown in Figure 4. A closer look shows improvements of nearly 30% for smaller dimensions and larger BPPs.

Figure 6: Ablation across encoding dimension size: Our method outperforms the baseline at smaller encoded dimensions, at the cost of slightly reduced PSNR.

Conclusion

Integrating diffusion models into video compression is a significant step forward for multimedia technologies. In our experiments, these models lowered the bits per pixel needed while keeping reconstruction quality comparable to or better than the benchmark, which reduces the bandwidth required for high-quality video streaming. Next steps include optimizing these models for real-time processing and exploring their application to live video feeds.