Thayne T. Walker, Jaime S. Ide, Minkyu Choi, Michael John Guarino, and Kevin Alcedo.

International Conference on Control, Decision and Information Technologies (CoDIT), 2023

The coordination of multiple autonomous agents is essential for achieving collaborative goals efficiently, especially in environments with limited communication and sensing capabilities. Our recent study, presented at CoDIT 2023, explores a novel method to tackle this challenge. We introduce Multi-Agent Reinforcement Learning with Epistemic Priors (MARL-EP), a technique that leverages shared mental models to enable high-level coordination among agents, even with severely impaired sensing and zero communication.

Problem Definition

Imagine a scenario where multiple autonomous agents need to navigate from their respective starting positions to specific goal locations. The challenge intensifies when these agents cannot communicate with each other and have limited sensing capabilities, leading to potential collisions or inefficient goal achievement. This problem is prevalent in various applications, including warehouse logistics, firefighting, surveillance, and transportation.

Motivating Example

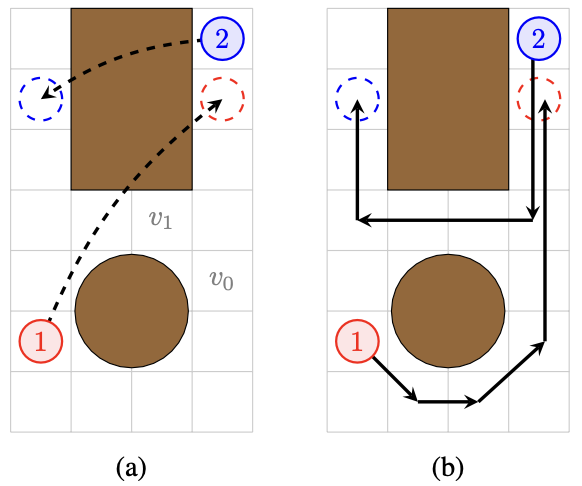

Consider a cooperative navigation problem where agents must move from their start states to goal states without colliding with each other or obstacles. Traditional methods rely on accurate state information and communication, but what happens when these are unavailable? Figure 1 in our paper illustrates such a scenario where two agents must navigate to their goals without knowing each other’s real-time positions. If each agent independently chooses the shortest path, they will collide. However, with shared knowledge of each other’s goals and an understanding of common conventions, they can avoid collisions and navigate efficiently.

Background

Multi-Agent Reinforcement Learning

Multi-Agent Reinforcement Learning (MARL) involves training multiple agents to make decisions that maximize a cumulative reward. This is often modeled using Decentralized Partially Observable Markov Decision Processes (DEC-POMDPs). These processes account for the fact that agents have only partial information about the environment and must make decisions based on this limited view. Traditional MARL approaches face significant challenges when communication is restricted or when sensing is limited.
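For readers who want the formal object behind the acronym, a Dec-POMDP is commonly written as the tuple below. The notation follows the usual textbook convention and is not taken from the paper, so the symbols should be read as assumptions.

```latex
% Standard Dec-POMDP tuple (textbook notation, assumed here)
\[
  \mathcal{M} = \langle \mathcal{I},\ \mathcal{S},\ \{\mathcal{A}_i\}_{i \in \mathcal{I}},\ T,\ R,\ \{\Omega_i\}_{i \in \mathcal{I}},\ O,\ \gamma \rangle
\]
% I: set of agents, S: global states, A_i: actions of agent i,
% T(s' | s, a): transition model under the joint action a = (a_1, ..., a_n),
% R(s, a): shared team reward, Omega_i: observations of agent i,
% O(o | s', a): joint observation model, gamma in [0, 1): discount factor.
\[
  \max_{\pi_1, \dots, \pi_n}\ \mathbb{E}\!\left[ \sum_{t=0}^{\infty} \gamma^{t}\, R(s_t, \mathbf{a}_t) \right],
  \qquad a_{i,t} \sim \pi_i(\cdot \mid o_{i,0}, \dots, o_{i,t})
\]
```

Each policy conditions only on that agent's own observation history, which is why missing sensing or communication makes coordination hard.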

Figure 1: (a) An example instance of a cooperative navigation problem and (b) a solution for the problem instance.


Epistemic Logic

Epistemic logic deals with reasoning about knowledge and beliefs. It allows agents to estimate the knowledge of other agents and predict their actions based on this estimation. By incorporating epistemic logic into MARL, we enable agents to infer unobservable parts of the environment, thereby making more informed decisions.

Reinforcement Learning with Epistemic Priors

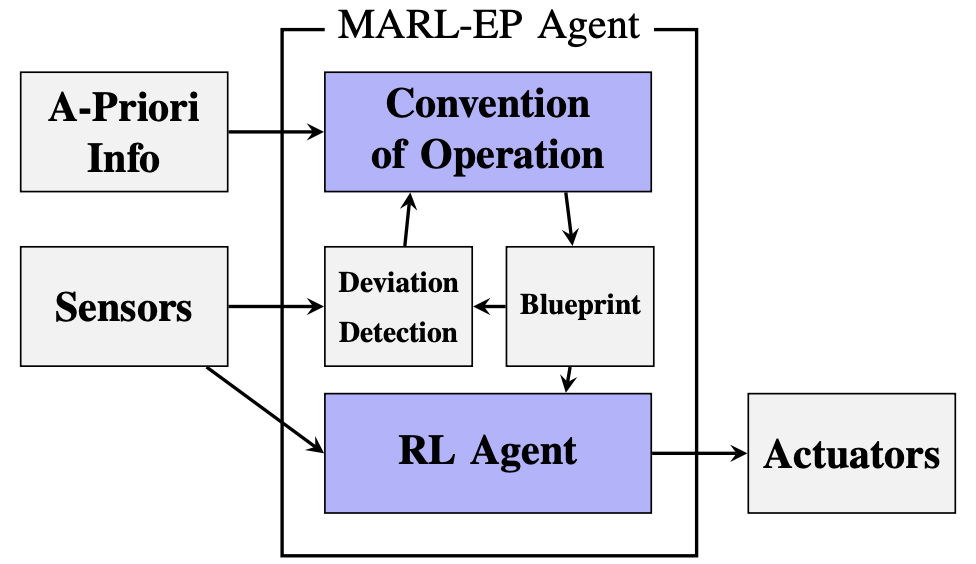

Our approach, MARL-EP, integrates epistemic priors into the decision-making process of each agent. These priors serve as a shared mental model, helping agents infer the unobservable parts of the environment and coordinate their actions.

Convention of Operation

A convention of operation is a set of predefined rules or protocols that guide agents’ actions to achieve coordination. For example, in traffic systems, the convention might be to drive on the right side of the road. By following such conventions, agents can predict each other’s actions even without direct communication.

Epistemic Blueprints

In the MARL-EP architecture, each agent uses a deterministic multi-agent planner to generate an epistemic blueprint, a complete multi-agent plan. This blueprint guides the agent’s actions, assuming that all agents have identical plans and follow the same conventions. This method allows agents to coordinate implicitly, leveraging shared knowledge and conventions to achieve their goals.
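The idea can be illustrated with a minimal sketch, assuming a small grid world and a simple prioritized space-time search as the deterministic planner. The planner, the function names `plan_one` and `epistemic_blueprint`, and the grid setup are all hypothetical stand-ins, not the planner used in the paper. The point is only that a deterministic procedure with fixed tie-breaking gives every agent the same joint plan when run independently on the same inputs.

```python
from collections import deque

# Fixed move order acts as the shared convention: ties are always broken the same way.
MOVES = [(0, 0), (1, 0), (0, 1), (-1, 0), (0, -1)]  # wait, east, north, west, south

def plan_one(start, goal, size, reserved, horizon):
    """Breadth-first search over (cell, time) that avoids cells reserved by earlier agents."""
    queue = deque([(start, 0, [start])])
    seen = {(start, 0)}
    while queue:
        cell, t, path = queue.popleft()
        # Accept the goal only if the agent can stay there until the horizon.
        if cell == goal and all((goal, k) not in reserved for k in range(t, horizon + 1)):
            return path + [goal] * (horizon - t)
        if t == horizon:
            continue
        for dx, dy in MOVES:
            nxt = (cell[0] + dx, cell[1] + dy)
            if not (0 <= nxt[0] < size and 0 <= nxt[1] < size):
                continue
            if (nxt, t + 1) in reserved:  # vertex conflict
                continue
            if (nxt, t) in reserved and (cell, t + 1) in reserved:  # swap conflict (conservative check)
                continue
            if (nxt, t + 1) not in seen:
                seen.add((nxt, t + 1))
                queue.append((nxt, t + 1, path + [nxt]))
    return None

def epistemic_blueprint(starts, goals, size=5, horizon=12):
    """Joint plan for all agents, planned one agent at a time in fixed agent-id order."""
    reserved, plan = set(), {}
    for agent in sorted(starts):
        path = plan_one(starts[agent], goals[agent], size, reserved, horizon)
        if path is None:
            raise ValueError(f"no plan for agent {agent} within the horizon")
        plan[agent] = path
        reserved.update((cell, t) for t, cell in enumerate(path))
    return plan

# Two agents that must swap ends of the same row: shortest paths alone would collide.
starts = {0: (0, 2), 1: (4, 2)}
goals = {0: (4, 2), 1: (0, 2)}
plan = epistemic_blueprint(starts, goals)
```

Every agent would call `epistemic_blueprint` with the same starts and goals and then follow only its own entry, `plan[agent_id]`, while treating the other entries as estimates of where its teammates are.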

Multi-Agent RL with Epistemic Priors

We employ the QMIX algorithm, which factorizes the joint action-value function into per-agent value functions that are combined by a monotonic mixing network. This lets agents be trained centrally while still acting on local information, and it makes learning more efficient. In our modified approach, we incorporate epistemic priors into the training process. These priors enhance the agents’ understanding of the global state, improving coordination and overall performance.
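The monotonic mixing step in QMIX can be sketched in a few lines of NumPy. This is a simplified illustration, not the paper's implementation: the hypernetworks are reduced to single linear layers, the weights are random rather than trained, and the dimensions and the name `mix` are assumptions chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
n_agents, state_dim, embed_dim = 3, 8, 16

# Hypernetwork parameters: maps from the global state to the mixing network's weights.
# (Assumption: single linear layers, untrained, to keep the sketch short.)
hyper_w1 = rng.normal(size=(state_dim, n_agents * embed_dim))
hyper_b1 = rng.normal(size=(state_dim, embed_dim))
hyper_w2 = rng.normal(size=(state_dim, embed_dim))
hyper_b2 = rng.normal(size=(state_dim, 1))

def elu(x):
    """Exponential linear unit, a monotonically increasing activation."""
    return np.where(x > 0, x, np.exp(np.minimum(x, 0)) - 1)

def mix(agent_qs, state):
    """Combine per-agent Q-values into a team value that is monotonic in each input."""
    w1 = np.abs(state @ hyper_w1).reshape(n_agents, embed_dim)  # non-negative weights
    b1 = state @ hyper_b1
    hidden = elu(agent_qs @ w1 + b1)
    w2 = np.abs(state @ hyper_w2)  # non-negative weights
    b2 = state @ hyper_b2
    return float(hidden @ w2 + b2[0])

state = rng.normal(size=state_dim)
agent_qs = rng.normal(size=n_agents)
q_tot = mix(agent_qs, state)
```

Because the mixing weights pass through an absolute value and the activation is increasing, raising any single agent's value can never lower the team value. That property is what allows each agent to act greedily on its own value function at execution time.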

Figure 2: MARL-EP System Architecture.


Algorithm

Here’s a simplified version of our algorithm:

- Initialize the parameters for the mixing network, agent networks, and hypernetwork.

- Estimate epistemic priors for each agent at the beginning of each episode.

- Train agents using local observations augmented with these epistemic priors.

- Update model parameters iteratively based on the observed rewards and transitions.
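Steps two and three above can be sketched as follows. The prior estimator here is a deliberately crude, hypothetical stand-in (straight-line motion from start to goal) rather than the planner-based blueprint, and the names `estimate_priors` and `augment` are assumptions for illustration only.

```python
import numpy as np

def estimate_priors(starts, goals, horizon):
    """Hypothetical prior: assume every agent moves in a straight line to its goal.

    Returns an array of shape (horizon + 1, n_agents, 2) of estimated positions.
    """
    steps = np.linspace(0.0, 1.0, horizon + 1)[:, None, None]
    return starts[None] + steps * (goals - starts)[None]

def augment(local_obs, priors_t, agent):
    """Append the estimated positions of the other agents to one agent's observation."""
    others = np.delete(priors_t, agent, axis=0).ravel()
    return np.concatenate([local_obs, others])

starts = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
goals = np.array([[1.0, 1.0], [1.0, 1.0], [0.5, 1.0]])

# Estimated once at the start of the episode, then looked up at every time step.
priors = estimate_priors(starts, goals, horizon=4)
obs = augment(local_obs=starts[0], priors_t=priors[2], agent=0)
```

The augmented vector `obs` is what the agent network would consume in place of the raw local observation. The learning update itself is unchanged by this step.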

Experimental Results

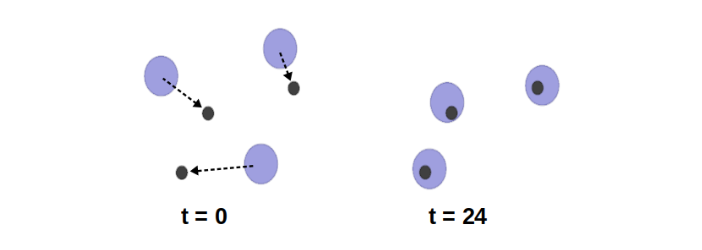

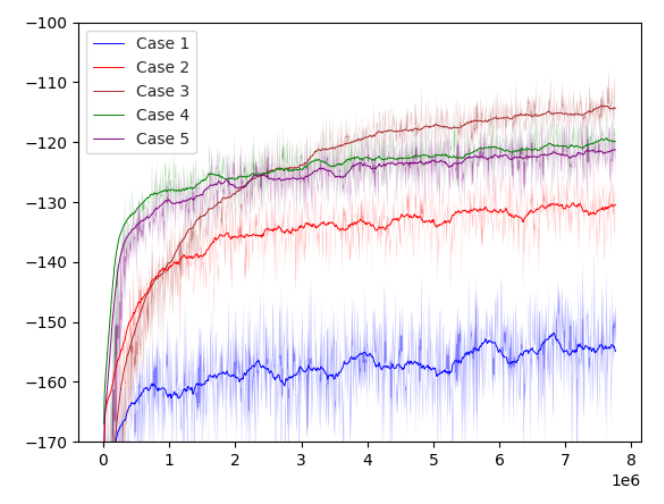

We validated our approach using the Simple-Spread task in the Multi-Agent Particle Environment (MPE). This task involves multiple agents cooperating to reach specific landmarks while avoiding collisions. We tested five different scenarios to evaluate the performance of our method:

- No sensing (baseline): Agents have no access to other agents’ locations.

- Limited sensing: Agents can sense nearby agents.

- Perfect sensing: Agents know the locations of all other agents.

- No sensing, with priors (QMIX-EP): Same as the baseline, but each agent also receives estimated locations of the other agents.

- Limited sensing, with priors (QMIX-EP): Limited sensing augmented with estimated locations.
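One way to picture the five scenarios is as two settings: a sensing radius and a flag for whether priors are available. The sketch below is an assumption-laden illustration. The radius value, the zero-filling of unsensed agents, the rule that an estimate replaces an unsensed position, and the names `SCENARIOS` and `observe_others` are all hypothetical and not taken from the paper.

```python
import numpy as np

# Hypothetical configuration of the five scenarios (the radius 0.5 is an assumed value).
SCENARIOS = {
    "no_sensing": {"radius": 0.0, "use_priors": False},
    "limited_sensing": {"radius": 0.5, "use_priors": False},
    "perfect_sensing": {"radius": np.inf, "use_priors": False},
    "no_sensing_priors": {"radius": 0.0, "use_priors": True},
    "limited_sensing_priors": {"radius": 0.5, "use_priors": True},
}

def observe_others(positions, estimates, agent, radius, use_priors):
    """Return an (n_agents - 1, 2) array of what `agent` believes about the others.

    Agents within the radius are seen at their true position. Unsensed agents are
    filled with the estimate when priors are enabled, and with zeros otherwise.
    """
    me = positions[agent]
    rows = []
    for other in range(len(positions)):
        if other == agent:
            continue
        if np.linalg.norm(positions[other] - me) < radius:
            rows.append(positions[other])
        elif use_priors:
            rows.append(estimates[other])
        else:
            rows.append(np.zeros(2))
    return np.array(rows)

positions = np.array([[0.0, 0.0], [0.3, 0.0], [2.0, 2.0]])
estimates = np.array([[0.0, 0.0], [0.4, 0.0], [1.9, 2.1]])
views = {
    name: observe_others(positions, estimates, agent=0, **cfg)
    for name, cfg in SCENARIOS.items()
}
```

In this toy setup, agent 0 sees the nearby agent directly under limited sensing and falls back on the estimate for the distant one only when priors are enabled.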

Figure 3: MPE: Simple-Spread task. Three agents cooperate to reach the three landmarks as quickly as possible, while avoiding collisions.


Results

The results, depicted in Figure 4 of our paper, show significant improvements in performance with the use of epistemic priors. In scenarios with no or limited sensing, MARL-EP achieved performance levels close to those with perfect sensing. This demonstrates the effectiveness of using epistemic priors for enhancing coordination among agents.

Figure 4: Evaluation of trained QMIX agents for different cases.


Conclusions

Our study demonstrates that integrating epistemic priors into multi-agent reinforcement learning can significantly enhance coordination in environments with limited sensing and communication. By leveraging shared mental models and conventions of operation, agents can infer the actions of others and achieve high levels of coordination without direct communication.

Future work will focus on applying this approach in real-world scenarios and exploring real-time updates to the epistemic blueprints. We believe that MARL-EP holds great potential for advancing the capabilities of autonomous multi-agent systems in various applications.

For those interested in the technical details and further results, we encourage you to read our full paper presented at CoDIT 2023.

Citation

@inproceedings{