Minkyu Choi, Max Filter, Kevin Alcedo, Thayne T. Walker, David Rosenbluth, and Jaime S. Ide.

International Conference on Unmanned Aircraft Systems (ICUAS), 2022

The rapid evolution of autonomous unmanned aerial vehicle (UAV) technology has spurred significant advances in UAV control systems. A prominent challenge in this domain is balancing the agility of Proportional-Integral-Derivative (PID) systems for low-level control with the adaptability of Deep Reinforcement Learning (DRL) for navigation through complex environments. This post describes an approach that combines these technologies to improve the retraining efficiency of UAV controllers using Soft Actor-Critic (SAC) with inhibitory networks.

Introduction to UAV Control Systems

Autonomous UAVs benefit immensely from DRL due to its ability to handle nonlinear airflow effects caused by multiple rotors and to operate in uncertain environments such as those with wind and obstacles. Traditional DRL methods have shown success in training efficient UAV navigation systems. However, real-world applications often necessitate retraining these systems to adapt to new, more challenging tasks.

The Challenge of Retraining in DRL

One significant issue with traditional DRL algorithms like SAC is catastrophic forgetting, where previously learned skills are lost when the system is retrained for new tasks. This problem is especially pronounced in dynamic environments where UAVs must quickly adapt to new challenges without losing their established navigation capabilities.

Introducing Soft Actor-Critic with Inhibitory Networks (SAC-I)

Inspired by mechanisms in cognitive neuroscience, the proposed SAC-I approach addresses this retraining challenge. Inhibitory control in neuroscience refers to the brain’s ability to modify ongoing actions in response to changing task demands. Similarly, SAC-I utilizes separate and adaptive state value evaluations along with distinct automatic entropy tuning.

Key Components of SAC-I

- Multiple Value Functions: SAC-I employs multiple value functions that operate in a state-dependent manner. This allows the system to retain knowledge of familiar situations while adapting to new ones without forgetting previously learned skills.

- Inhibitory Networks: An additional value network, termed the inhibitory network, is introduced. This network specifically learns new evaluations required for novel tasks, thus preventing interference with the previously learned value network.

- Dual Entropy Estimation: The approach also includes estimating two distinct entropy parameters to manage the exploration-exploitation trade-off more effectively during retraining.
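The three components above can be sketched in a few lines of plain Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the hard gate on a `novel` flag, the names `gated_value` and `EntropyTuner`, the learning rate, and the target entropy are all hypothetical. The temperature update follows the standard SAC automatic entropy tuning rule; keeping one tuner for familiar states and one for novel states is an assumed reading of "two distinct entropy parameters".

```python
import math
from dataclasses import dataclass


def gated_value(state, novel, base_value, inhibitory_value):
    """Pick a value estimate in a state-dependent way (assumed hard gate)."""
    # Familiar states keep the previously learned estimate untouched;
    # novel states are evaluated by the separately trained inhibitory network.
    return inhibitory_value(state) if novel else base_value(state)


@dataclass
class EntropyTuner:
    """One SAC temperature (alpha) with standard automatic tuning."""
    target_entropy: float
    log_alpha: float = 0.0
    lr: float = 0.01  # hypothetical step size

    @property
    def alpha(self):
        return math.exp(self.log_alpha)

    def update(self, log_probs):
        # Standard SAC temperature loss:
        #   J = -log_alpha * mean(log_pi + target_entropy)
        # so a gradient-descent step on log_alpha adds lr * mean(...).
        mean_term = sum(lp + self.target_entropy for lp in log_probs) / len(log_probs)
        self.log_alpha += self.lr * mean_term
        return self.alpha


# Two independent temperatures (assumed split: familiar vs. novel states).
tuner_familiar = EntropyTuner(target_entropy=-4.0)
tuner_novel = EntropyTuner(target_entropy=-4.0)

# Policy entropy below the target (high log-probabilities) raises alpha,
# which pushes the policy toward more exploration in novel states only.
tuner_novel.update([5.0, 5.0])
```

Because the inhibitory estimate is only consulted for novel states in this sketch, updates to it cannot overwrite the values the original network assigns to familiar states, which is the intuition behind avoiding interference.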

Experimental Validation

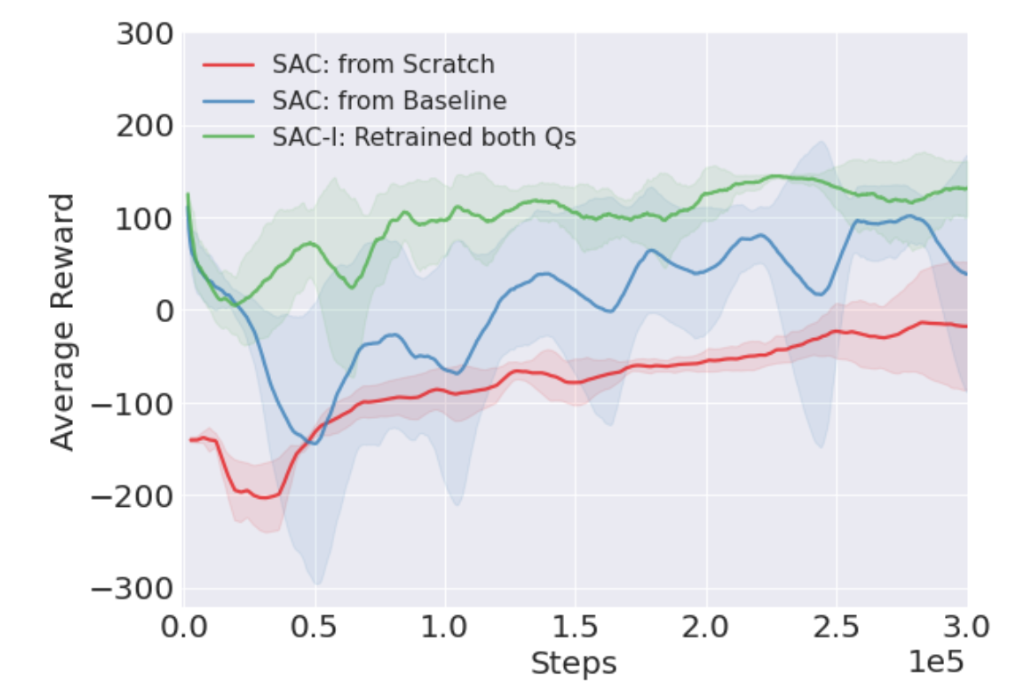

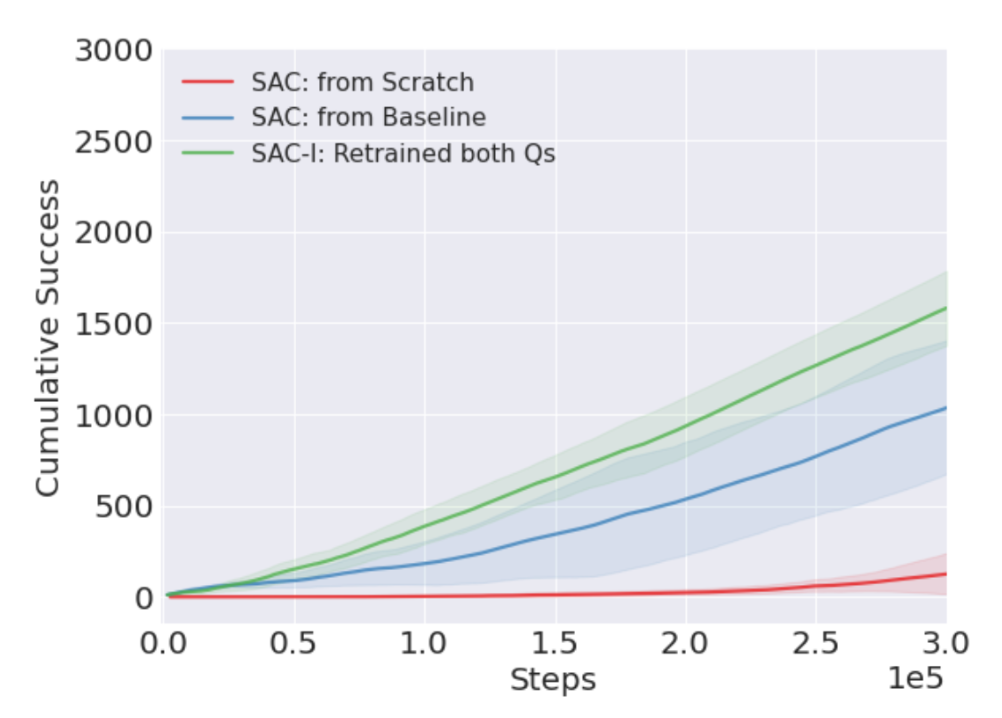

The efficacy of SAC-I was validated through experiments using a simulated quadcopter in a high-fidelity environment. The results demonstrated that SAC-I significantly accelerates the retraining process compared to standard SAC methods. Key metrics such as sample efficiency and cumulative success rates were used to benchmark the performance.
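To make the two metrics concrete, here is a minimal sketch of how they could be computed from a training log. The definitions are assumptions for illustration, since the post does not spell them out: cumulative success is taken as the running count of successful episodes, and sample efficiency is proxied by the number of environment steps consumed before a moving-average success rate first reaches a threshold. The function names, window, and threshold are hypothetical, and the log is toy data rather than results from the paper.

```python
from itertools import accumulate


def cumulative_success(successes):
    """Running count of successful episodes (1 = success, 0 = failure)."""
    return list(accumulate(successes))


def steps_to_threshold(successes, steps_per_episode, window=3, threshold=0.66):
    """Environment steps used until the moving success rate reaches threshold.

    Returns None if the threshold is never reached.
    """
    total_steps = 0
    for i, n_steps in enumerate(steps_per_episode):
        total_steps += n_steps
        if i + 1 >= window:
            recent = successes[i + 1 - window : i + 1]
            if sum(recent) / window >= threshold:
                return total_steps
    return None


# Toy log, not data from the paper.
outcomes = [0, 0, 1, 0, 1, 1]
episode_steps = [100, 100, 80, 100, 70, 60]

print(cumulative_success(outcomes))                 # [0, 0, 1, 1, 2, 3]
print(steps_to_threshold(outcomes, episode_steps))  # 450
```

Under these definitions, an agent that retrains faster reaches the threshold after fewer environment steps and shows a steeper cumulative success curve.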

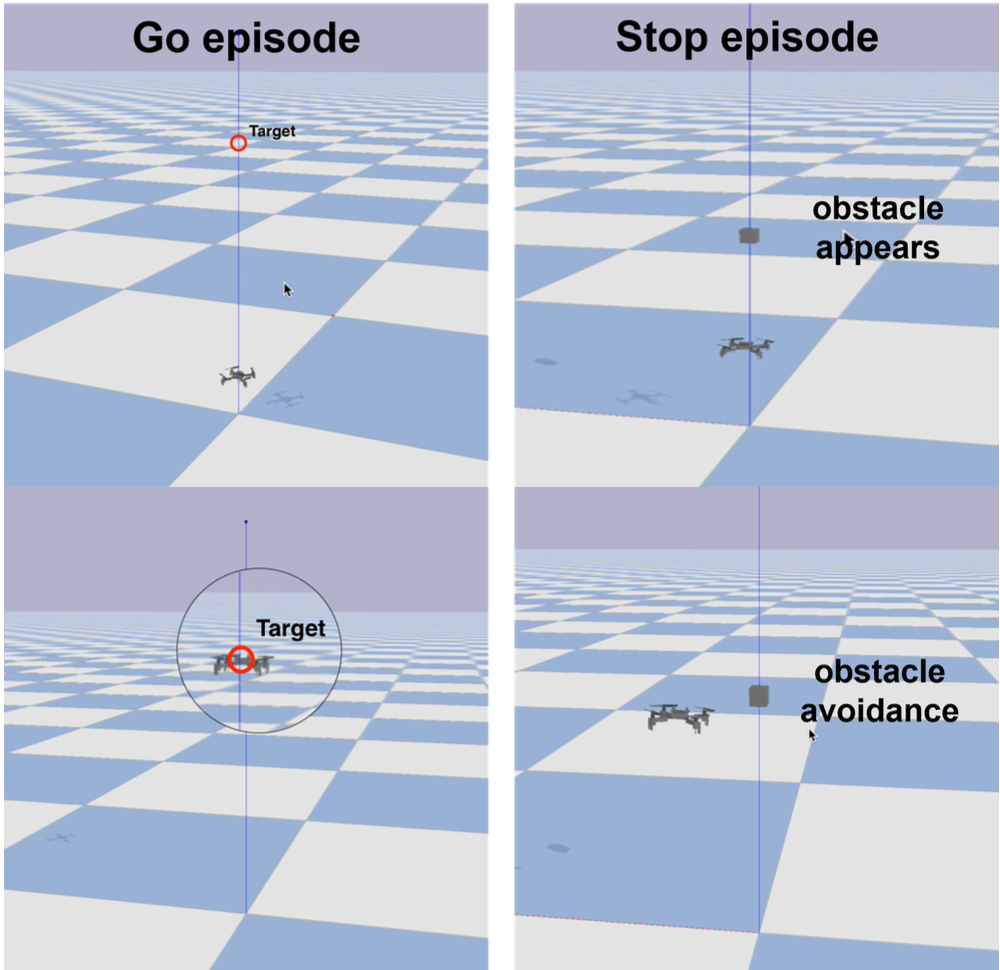

Figure 1: Takeoff-Target-Aviary-v0 task with obstacle. Go episode: agent starts at (0,0,0.11) and it has to reach and hover around target point at (0, 0, 0.7). Stop episode: obstacle appears randomly after the episode starts, and agent has to avoid it and reach the target.
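The Go and Stop episodes described in the caption can be sketched as a small episode sampler. This is an illustrative sketch, not the environment's actual API: only the start and target coordinates come from the caption, while the `Episode` class, the Stop probability, the obstacle onset range, and the hover tolerance are hypothetical.

```python
import math
import random
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

START: Vec3 = (0.0, 0.0, 0.11)  # from the figure caption
TARGET: Vec3 = (0.0, 0.0, 0.7)  # from the figure caption


@dataclass
class Episode:
    kind: str                     # "go" or "stop"
    obstacle_step: Optional[int]  # step at which the obstacle appears, if any


def sample_episode(rng, stop_prob=0.5, max_onset_step=50):
    """Sample a Go or Stop episode (probability and onset range are assumed)."""
    if rng.random() < stop_prob:
        # Stop episode: the obstacle appears at a random step after the start.
        return Episode("stop", rng.randint(1, max_onset_step))
    # Go episode: no obstacle, the agent only has to reach and hover.
    return Episode("go", None)


def near_target(position, tolerance=0.05):
    """True when the drone is within an assumed tolerance of the target."""
    return math.dist(position, TARGET) <= tolerance


episode = sample_episode(random.Random(0))
```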

Results Summary

- Faster Retraining: SAC-I agents needed substantially less retraining time, reaching proficiency on the new task up to five times faster than standard SAC agents.

- Improved Sample Efficiency: The novel approach maintained high levels of sample efficiency, crucial for practical applications where real-world data collection is expensive and time-consuming.

Figure 2: Average reward during agent training.

Figure 3: Cumulative success during agent training.

Conclusion

SAC-I makes retraining UAV controllers markedly more efficient. By adding an inhibitory network inspired by cognitive control mechanisms, the approach adapts quickly to new tasks while preserving established skills. This is promising for real-world UAV applications, where quick adaptation to changing environments is critical.

Future Directions

Further research will focus on extending this approach to other DRL algorithms and applying it to more complex and dynamic UAV tasks. Additionally, improving the auto-tuning methods for entropy parameters will enhance the stability and performance of SAC-I in various applications.

Citation

@INPROCEEDINGS{9836052,